
Thursday, January 28, 2021

A web scraping tool can automate the repetitive work of copying and pasting. In fact, Google Sheets can serve as a basic web scraper: you can use a special formula to extract data from a webpage, import the data directly into Google Sheets, and share it with your friends.

In this article, I will first show you how to build a simple web scraper with Google Sheets. Then I will compare it with an automatic web scraper, Octoparse. After reading it, you will have a clear idea about which method would work better for your specific web scraping needs.

Option#1: Build an easy web scraper using ImportXML in Google Sheets

Step 1: Open a new Google sheet.

Step 2: Open the target website in Chrome. In this case, we choose a game sales site. Right-click on the web page to bring up a drop-down menu, then select 'Inspect'. Press the three-key combination 'Ctrl' + 'Shift' + 'C' to activate the 'Selector'. This lets the inspection panel show information about the element you select on the webpage.

Step 3: Copy and paste the website URL into the sheet.

Option#2: Let's try to grab price data with a simple formula: ImportXML

Step 1: Copy the XPath of the element. Select the price element and right-click to bring up the drop-down menu. Then select “Copy” and choose “Copy XPath”.

Step 2: Type the formula to the spreadsheet.

=IMPORTXML("URL", "XPATH expression")

Note that the 'XPath expression' is the one we just copied from Chrome. Replace any double quotation marks (") within the XPath expression with single quotation marks ('), because the expression itself is wrapped in double quotes inside the formula.
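The quote replacement described above is easy to get wrong by hand, so here is a minimal Python sketch that assembles the formula text for you. The helper name `build_importxml` and the sample XPath are hypothetical, made up for illustration; only the `=IMPORTXML(...)` formula shape comes from the steps above.

```python
def build_importxml(url: str, xpath: str) -> str:
    """Return the text of an IMPORTXML formula for a Google Sheets cell."""
    # Double quotes inside the XPath would end the formula's string argument
    # early, so swap them for single quotes before wrapping the expression.
    safe_xpath = xpath.replace('"', "'")
    return f'=IMPORTXML("{url}", "{safe_xpath}")'


# Hypothetical XPath of the kind Chrome's "Copy XPath" command produces.
copied_xpath = '//*[@id="example-table"]/tbody/tr[1]/td[4]'
print(build_importxml("http://steamspy.com/", copied_xpath))
```

Paste the printed line into a cell. The sketch only rewrites quotes; it does not check that the XPath actually matches anything on the page.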


Option#3: There's another formula we can use:

=IMPORTHTML("URL", "QUERY", Index)

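With this formula, you extract the whole table. Here QUERY is either "table" or "list", and Index is the position of that table or list on the page, counting from 1.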
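To make the idea of "pick a table by its position and return every row" concrete, here is a rough Python sketch using only the standard library. It is not how Google Sheets implements the formula; the names `TableCollector`, `import_table`, and `SAMPLE_HTML` are made up for illustration, and nested tables are not handled.

```python
from html.parser import HTMLParser


class TableCollector(HTMLParser):
    """Collect every <table> on a page as a list of rows of cell text."""

    def __init__(self):
        super().__init__()
        self.tables = []   # all tables found so far
        self._row = None   # cells of the row being read, if any
        self._cell = None  # text fragments of the cell being read, if any

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that sits inside a table cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None


def import_table(html: str, index: int):
    """Return the table at a 1-based index, mirroring the Index argument."""
    collector = TableCollector()
    collector.feed(html)
    return collector.tables[index - 1]


# Made-up sample page; a real page would be downloaded first.
SAMPLE_HTML = """
<table>
  <tr><th>Game</th><th>Price</th></tr>
  <tr><td>Example Game A</td><td>$9.99</td></tr>
  <tr><td>Example Game B</td><td>$19.99</td></tr>
</table>
"""

for row in import_table(SAMPLE_HTML, 1):
    print(row)
```

Each row comes back as a list of cell strings, which is roughly the grid that the spreadsheet formula fills in.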

Now, let's see how the same scraping task can be accomplished with a web scraper, Octoparse.

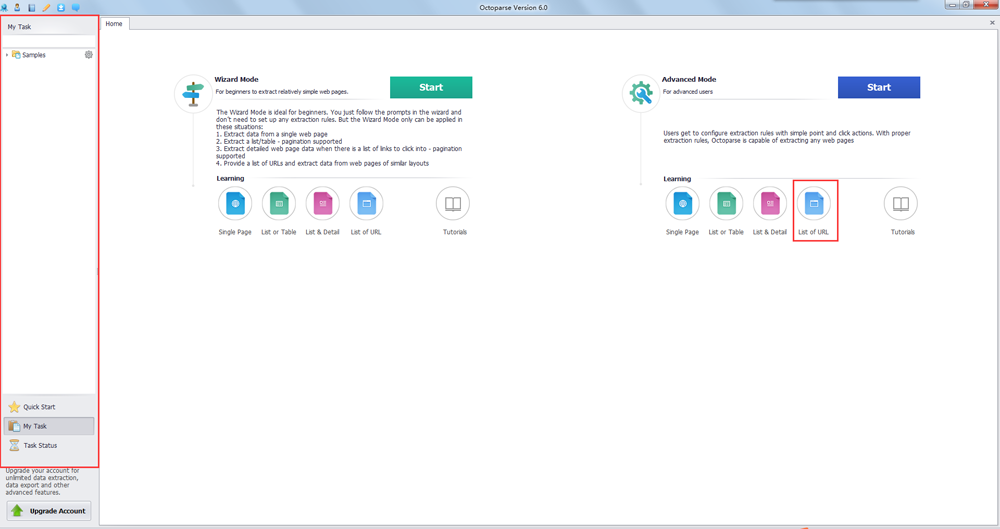

Step 1: Open Octoparse and build a new task by choosing “+Task” under “Advanced Mode”.

Step 2: Choose your preferred Task Group. Then enter the target website URL and click “Save URL”. In this case, the target is the game sales website http://steamspy.com/

Step 3: Notice that the game sales website is displayed in Octoparse's interactive view section. We need to create a loop list so that Octoparse goes through the listings.

1. Click one table row (it could be any field within the table). Octoparse then detects similar items and highlights them in red.

2. We need to extract by rows, so choose “TR” (Table Row) from the control panel.

3. After one row has been selected, choose the “Select all sub-element” command from the Action Tips panel.

Choose the “Select All” command to select all rows from the table.

Step 4: Choose “Extract data in the loop” to extract the data.

You can export the data to Excel, CSV, TXT, or other formats. Whereas the spreadsheet approach requires you to copy and paste manually, Octoparse automates the process. In addition, Octoparse offers more control over dynamic websites that use AJAX or reCAPTCHA.


Monday, January 25, 2021

The ever-growing demand for big data drives people to dive into the ocean of data. Web crawling plays an important role in collecting the webpages that are ready to be indexed. Nowadays, the three main ways to crawl web data are using public APIs provided by websites, writing a web crawler program, and using automated web crawler tools. Drawing on my experience in web scraping, I will discuss four free online web crawling (web scraping, data extraction, data scraping) tools for beginners' reference.


A web crawling tool is designed to scrape or crawl data from websites. We can also call it a web harvesting tool or a data extraction tool (it has many other names, such as web crawler, web scraper, data scraping tool, and spider). It scans webpages, searches for content at high speed, and harvests data on a large scale. One advantage of a web crawling tool is that users are not required to have any coding skills. In other words, it is supposed to be user-friendly and easy to get started with.
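For readers curious about what "scanning a webpage" involves under the hood, here is a small Python sketch of the core step: pulling the links out of a page so a crawler knows where to go next. It uses only the standard library; the names `LinkCollector` and `extract_links` and the sample markup are made up for illustration, and a real tool would also download pages, respect robots.txt, and avoid revisiting URLs.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the target of every <a href="..."> on a page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Turn relative links such as "/about" into absolute URLs.
            self.links.append(urljoin(self.base_url, href))


def extract_links(html: str, base_url: str):
    """Return the absolute URLs linked from the given HTML, in page order."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    return collector.links


# Made-up markup standing in for a downloaded page.
page = '<a href="/about">About</a> <a href="https://example.org/x">X</a>'
print(extract_links(page, "https://example.com/"))
```

A crawler repeats this step on each discovered link, which is the part the tools below wrap in a point-and-click interface.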


In addition, a web crawler is very useful for gathering large amounts of information for later access. A powerful web crawler should be able to export collected data into a spreadsheet or database and save it in the cloud. Extracted data can then be added to an existing database through an API. You can choose a web crawler tool based on your needs.
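As a rough illustration of the "export to a spreadsheet" step, the Python sketch below turns scraped rows into CSV text that any spreadsheet program can open. The function name `rows_to_csv` and the sample rows are made up; none of the tools discussed here is claimed to work this way internally.

```python
import csv
import io


def rows_to_csv(rows, header):
    """Serialize a header and data rows to CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue()


# Made-up rows standing in for scraped data.
scraped = [["Example Game A", "9.99"], ["Example Game B", "19.99"]]
print(rows_to_csv(scraped, ["game", "price"]))
```

Writing the returned text to a `.csv` file gives you something you can open directly in Excel or import into Google Sheets.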

#1 Octoparse

Octoparse is a desktop web crawler application for Windows and Mac OS. It provides a cloud-based service as well, offering at least 6 cloud servers that run users' tasks concurrently. It also supports cloud data storage and more advanced options for its cloud service. The UI is very user-friendly, and there are abundant tutorials on YouTube and on the official blog for users to learn how to build a scraping task on their own. Customer stories are also available to give an idea of how web scraping enhances businesses.

#2 Import.io

Import.io now provides an online web scraper service. Its data storage and related techniques are all cloud-based. To activate it, the user needs to add a web browser extension. The user interface of Import.io is easy to pick up: you can click and select the data fields to crawl the data you need. For more detailed instructions, you can visit their official website. Through APIs, Import.io customizes a dataset for pages without data. The cloud service provides data storage and related data processing options on its cloud platform, and extracted data can be added to an existing database.

#3 Scraper Wiki

Scraper Wiki’s free plan has a fixed number of datasets. The good news for all users is that the free service is as elegant as the paid one. They have also committed to providing journalists with premium accounts at no cost. Their free online web scraper can scrape documents in PDF format. They have another product under Scraper Wiki called Quickcode. It is a more advanced version of Scraper Wiki, since it is more of a programming environment with Python, Ruby, and PHP.

#4 Dexi.io

The Cloud Scraping Service in Dexi.io is designed for regular web users. It is committed to providing users with high-quality cloud scraping. It offers IP proxies and built-in CAPTCHA solving, which help users scrape most websites. Even beginners can learn to use CloudScrape easily by pointing and clicking. Cloud hosting allows all the scraped data to be stored in the cloud, and an API allows web robots to be monitored and managed remotely. Its CAPTCHA-solving option sets CloudScrape apart from services like Import.io or Kimono. The service provides a wide variety of data integrations, so extracted data can be uploaded automatically through (S)FTP or into your Google Drive, Dropbox, Box, or AWS. The data integration can be completed seamlessly.

Apart from these free online web crawler tools, there are other reliable web crawler tools that provide online services, though they may charge for them.
